Large Language Models (LLMs) have been widely adopted in real-world applications in recent years. However, these models remain limited on higher-level tasks, such as learning to use external tools like APIs.

Previous work has attempted to construct instruction-tuning data for tool use, but these efforts have not fully stimulated the tool-use capabilities of LLMs. They are also limited by a narrow range of APIs, constrained scenarios, and inferior planning and reasoning.

To address this gap, in a new paper ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, a research team from Tsinghua University, ModelBest Inc., Renmin University of China, Yale University, Tencent Inc. and Zhihu Inc. presents ToolLLM, a general tool-use framework that demonstrates a compelling capability to master 16464 real-world RESTful APIs.

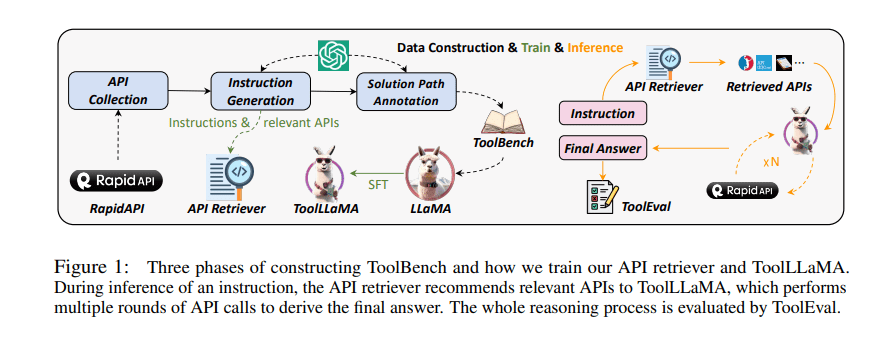

The team starts by collecting a high-quality instruction-tuning dataset, ToolBench. Its construction entails three phases: 1) API Collection: the team collected 16464 REST APIs from RapidAPI spanning 49 categories such as social media, e-commerce, and weather. 2) Instruction Generation: they sampled APIs from the collected set and prompted ChatGPT to generate diverse instructions for both single-tool and multi-tool scenarios. 3) Solution Path Annotation: they annotated high-quality responses to these instructions. To make data collection more efficient, they introduce a novel depth-first search-based decision tree (DFSDT) to boost the planning and reasoning ability of LLMs.
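The idea behind a depth-first search over candidate solution paths can be illustrated with a small sketch. This is not the authors' DFSDT implementation: the tool names, the `expand` and `is_solved` helpers, and the depth limit are all hypothetical, and in the real system an LLM would propose the next API calls and judge when to abandon a branch. The sketch only shows the backtracking pattern, where a failed branch is dropped and the search returns to an earlier step to try a different call.

```python
from typing import Callable, List, Optional, Sequence, Tuple

State = Tuple[str, ...]  # the sequence of (hypothetical) tool calls made so far


def dfs_solution_path(
    state: State,
    expand: Callable[[State], Sequence[State]],
    is_solved: Callable[[State], bool],
    max_depth: int,
    path: Optional[List[State]] = None,
) -> Optional[List[State]]:
    """Depth-first search with backtracking; returns the first solving path."""
    path = (path or []) + [state]
    if is_solved(state):
        return path
    if max_depth == 0:
        return None  # give up on this branch and backtrack
    for child in expand(state):
        found = dfs_solution_path(child, expand, is_solved, max_depth - 1, path)
        if found is not None:
            return found
    return None  # every child failed


# Hypothetical tool names, used purely for illustration.
TOOLS = ["get_weather", "search_city"]


def expand(state: State) -> List[State]:
    # Stand-in for the model proposing candidate next API calls.
    return [state + (tool,) for tool in TOOLS]


def is_solved(state: State) -> bool:
    # Stand-in for checking whether the instruction has been fulfilled.
    return state == ("search_city", "get_weather")


solution = dfs_solution_path((), expand, is_solved, max_depth=2)
print(solution)
```

Here the search first explores the branch that starts with `get_weather`, finds no solution within the depth limit, backtracks, and then succeeds on the branch that starts with `search_city`. A purely linear chain of calls would have been stuck on the first failed branch.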

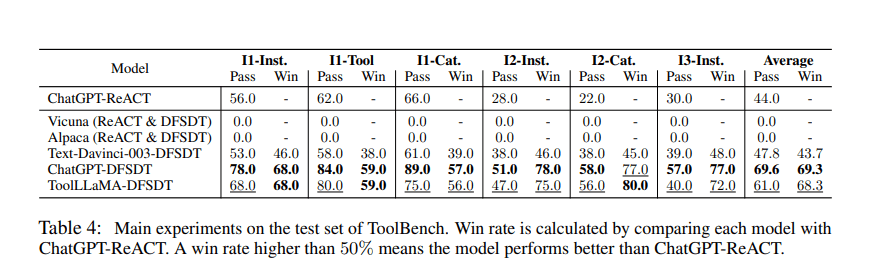

The researchers then fine-tuned LLaMA on ToolBench to obtain ToolLLaMA. They also built an automatic evaluator, ToolEval, to assess ToolLLaMA. Based on the experimental results, the team observed that ToolLLaMA significantly outperforms conventional tool-use methods, demonstrating strong tool-use capabilities and the ability to handle unseen APIs.

Overall, this work demonstrates the tool-use capabilities within LLMs, and the team believes it paves the way for research at the intersection of instruction tuning and tool use for LLMs.

The code, trained models, and demo are publicly available at https://github.com/OpenBMB/ToolBench. The paper ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs is on arXiv.

Author: Hecate He | Editor: Chain Zhang

