Understanding natural language involves the engagement of specific left-lateralized frontal and temporal brain regions, forming what is commonly referred to as the ‘language network.’ Despite significant progress, many aspects of the representations and algorithms supporting language comprehension in this network remain elusive.

Recent advancements in computing power, the availability of extensive text corpora, and breakthroughs in machine learning have paved the way for significant progress in artificial intelligence, particularly in language-related tasks. This progress has prompted researchers to explore the use of large language models (LLMs) as potential models for human language processing.

In a new paper, Driving and suppressing the human language network using large language models, a research team from the Massachusetts Institute of Technology, MIT-IBM Watson AI Lab, University of Minnesota and Harvard University uses a GPT-based encoding model to identify sentences predicted to elicit specific responses within the human language network.

The study had two primary objectives:

- To subject the new class of models, LLMs, to a rigorous evaluation as models of language processing.

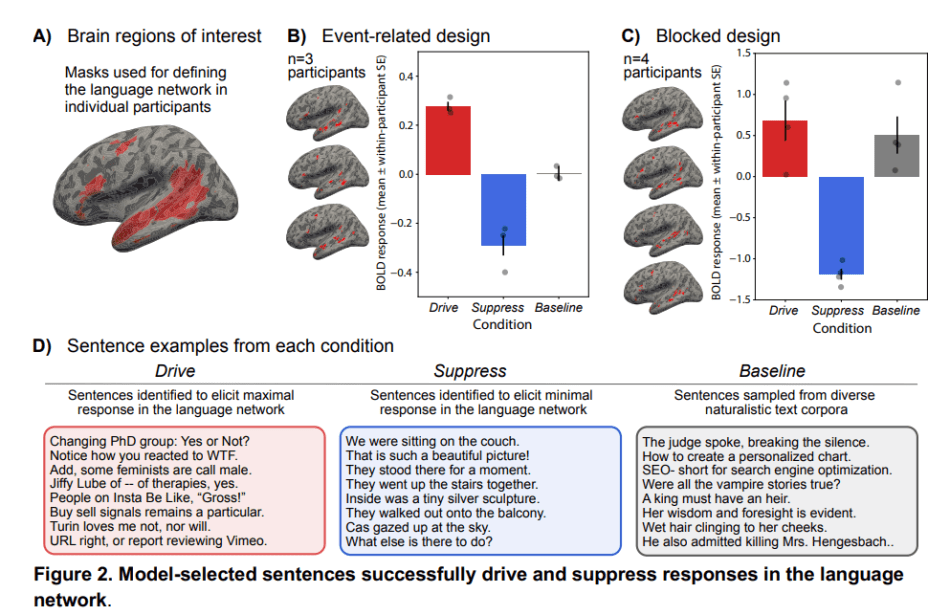

- To gain an intuitive-level understanding of language processing by characterizing the stimulus properties that drive or suppress responses in the language network across a diverse range of linguistic input and associated brain responses.

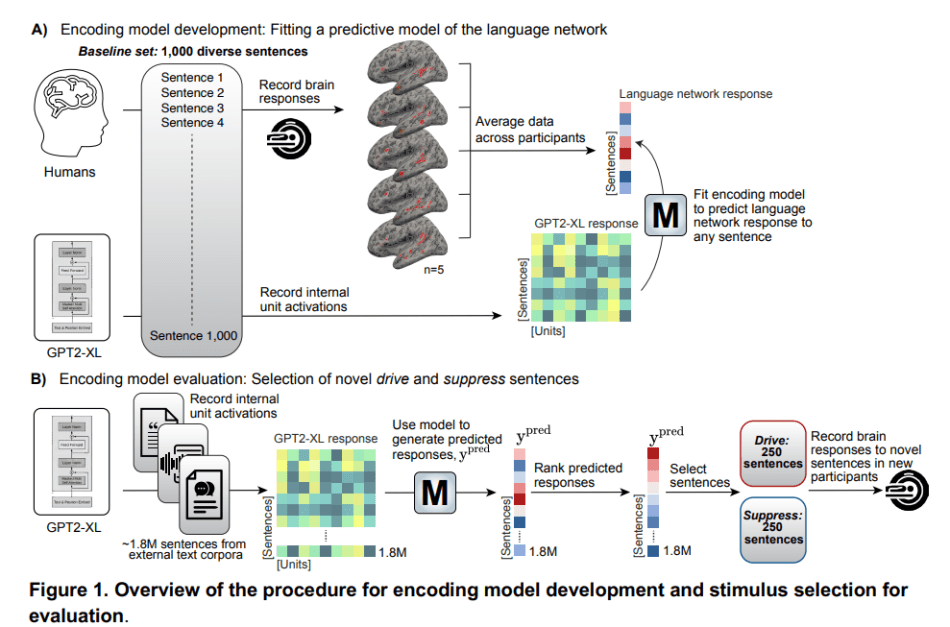

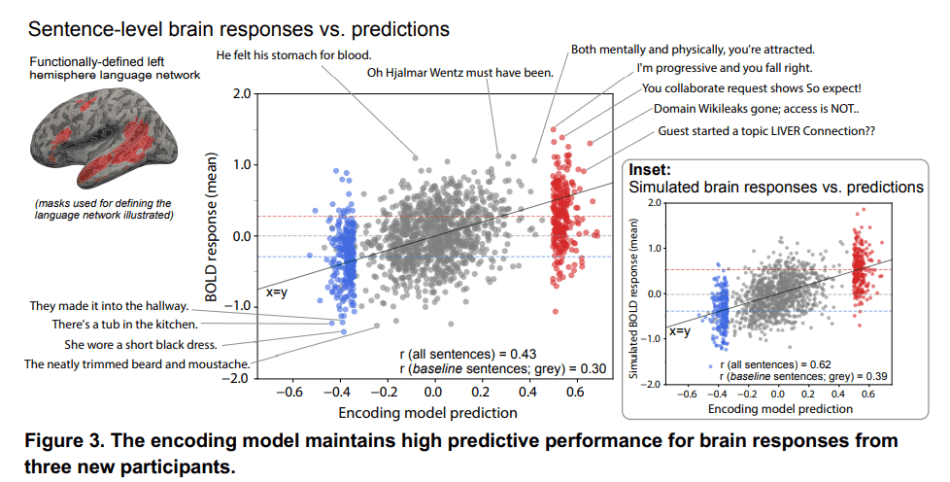

The team developed an encoding model to predict brain responses in the language network to arbitrary sentences. The model took last-token sentence embeddings from GPT2-XL as input and was trained on brain responses from five participants to 1,000 diverse, corpus-extracted sentences. It reached a prediction performance of r=0.38 on held-out sentences within this baseline set.
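The general shape of such an encoding model can be sketched in a few lines. The example below is only an illustration under stated assumptions, not the team's implementation: it uses ridge regression as the mapping from embeddings to responses, replaces GPT2-XL embeddings and brain recordings with small synthetic data, and uses hypothetical names (`fit_ridge`, `pearson_r`, a 16-dimensional embedding, an 800/200 split). It shows the two steps described above: fit a linear map on training sentences, then score it with the Pearson correlation on held-out sentences.

```python
import random


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def fit_ridge(X, y, alpha):
    """Closed-form ridge regression: w = (X^T X + alpha I)^-1 X^T y."""
    d = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) + (alpha if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(row[i] * t for row, t in zip(X, y)) for i in range(d)]
    return solve(A, b)


def predict(X, w):
    """Predicted response for each embedding (no intercept; data is zero-mean)."""
    return [sum(xi * wi for xi, wi in zip(row, w)) for row in X]


# Synthetic stand-ins (assumptions): 1,000 "sentences" with 16-dimensional
# "embeddings" and a noisy linear "brain response". Real GPT2-XL embeddings
# are far larger, and real responses come from brain recordings.
rng = random.Random(0)
n_sentences, dim = 1000, 16
true_w = [rng.gauss(0, 1) for _ in range(dim)]
X = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_sentences)]
y = [sum(a * b for a, b in zip(row, true_w)) + rng.gauss(0, 2) for row in X]

# Hold out the last 200 sentences to evaluate the fitted model.
X_train, y_train = X[:800], y[:800]
X_test, y_test = X[800:], y[800:]

weights = fit_ridge(X_train, y_train, alpha=1.0)
held_out_r = pearson_r(predict(X_test, weights), y_test)
print(f"held-out Pearson r: {held_out_r:.2f}")
```

The correlation printed here reflects only the noise level chosen for the synthetic data and says nothing about the r=0.38 reported in the study.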

To test the robustness of the encoding model, the team verified its predictivity on held-out sentences using different procedures for obtaining sentence embeddings, and even using embeddings from a different LLM architecture. Predictivity remained consistently high across these variants, supporting the model's reliability.

The encoding model also predicted responses well in anatomically defined language regions. Notably, sentences selected with the brain-aligned Transformer model GPT2-XL successfully drove and suppressed responses in the language network of new individuals, providing a non-invasive means of controlling neural activity in areas associated with higher-level cognition.
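One way to picture the selection of "drive" and "suppress" sentences is as a ranking problem: score every candidate sentence with the encoding model, then keep the extremes. The sketch below assumes exactly that and is not the team's procedure; the function name `select_drive_suppress`, the candidate labels, and the predicted scores are all made-up placeholders.

```python
def select_drive_suppress(predicted, k):
    """Return the k candidates with the highest predicted response (drive)
    and the k with the lowest (suppress).

    `predicted` maps each candidate sentence to its predicted response.
    """
    if 2 * k > len(predicted):
        raise ValueError("need at least 2 * k candidates to avoid overlap")
    # Sort candidates from lowest to highest predicted response.
    ranked = sorted(predicted.items(), key=lambda item: item[1])
    suppress = [sentence for sentence, _ in ranked[:k]]
    drive = [sentence for sentence, _ in reversed(ranked[-k:])]
    return drive, suppress


# Placeholder candidates with illustrative (not measured) predicted responses.
candidates = {
    "candidate A": 0.8,
    "candidate B": -0.5,
    "candidate C": 0.1,
    "candidate D": 1.3,
    "candidate E": -1.1,
}

drive, suppress = select_drive_suppress(candidates, k=2)
print("drive:", drive)
print("suppress:", suppress)
```

In an actual experiment, the selected sentences would then be shown to new participants to check whether the measured responses follow the predictions.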

This groundbreaking research not only validates the potential of LLMs as accurate models for human language processing but also introduces a paradigm shift in non-invasive neural activity control. The ability to influence neural responses in areas linked to higher-level cognition holds profound implications for both neuroscientific research and practical applications, marking a significant milestone in the intersection of artificial intelligence and neuroscience.

The paper Driving and suppressing the human language network using large language models is published in Nature Human Behaviour.

Author: Hecate He | Editor: Chain Zhang

