Generative AI and Large Language Models (LLMs) have achieved remarkable success in Natural Language Processing (NLP) tasks, and their evolution now extends to performing actions beyond text comprehension. This marks a significant shift in the pursuit of Artificial General Intelligence. Games are a natural testbed for that shift, and tactical Pokémon battles serve as an effective benchmark for assessing the game-playing capabilities of LLMs.

In the new paper PokéLLMon: A Human-Parity Agent for Pokémon Battles with Large Language Models, a Georgia Institute of Technology research team introduces PokéLLMon, an LLM-embodied agent that demonstrates human-parity performance in tactical battle games.

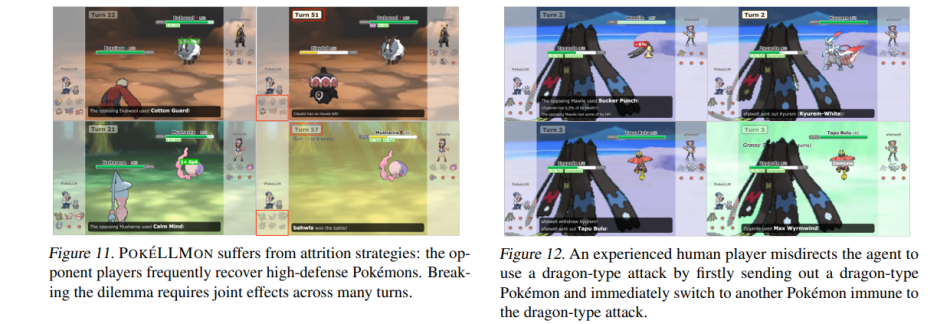

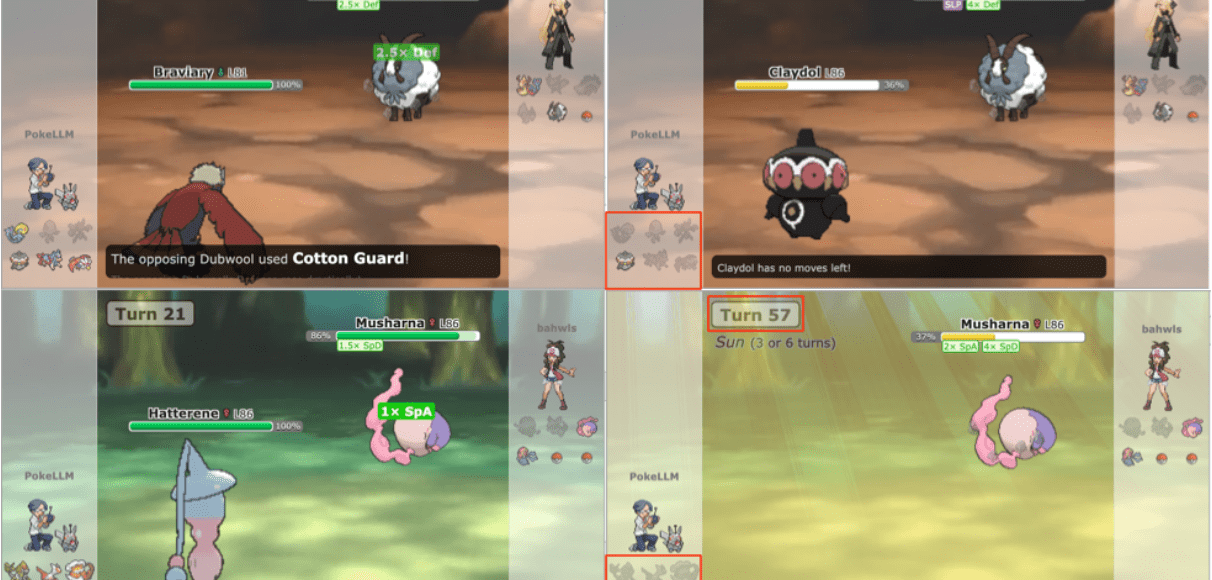

The primary goal of the paper is to develop an LLM-embodied agent that replicates the decision-making of a human player in Pokémon battles. The team also aims to identify the key factors behind the agent’s proficiency and to examine its strengths and weaknesses in battles against human opponents.

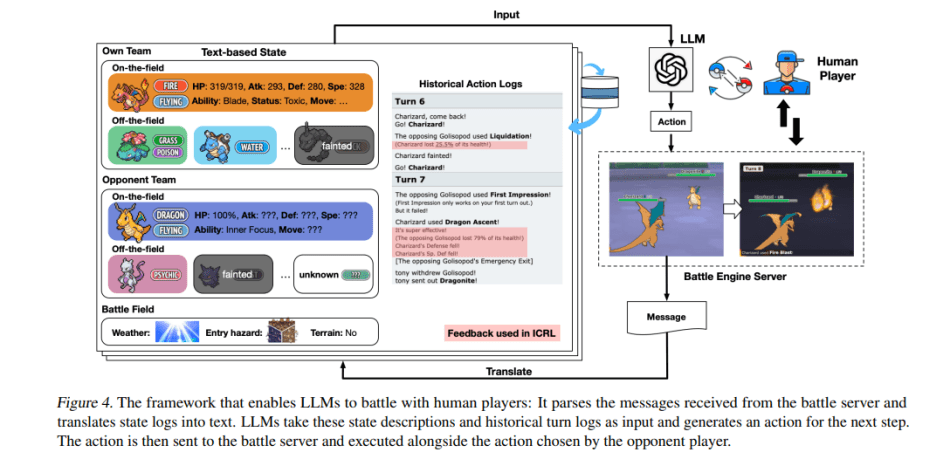

The overall framework of PokéLLMon

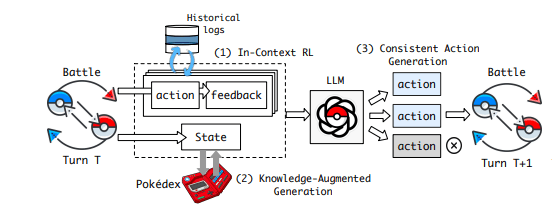

The research team begins by building an environment that parses the battle state and translates it into text descriptions, which lets LLMs play Pokémon battles autonomously. PokéLLMon’s framework encompasses three core strategies:

- In-Context Reinforcement Learning (ICRL): Using instant text-based feedback from battles to iteratively refine the policy.

- Knowledge-Augmented Generation (KAG): Retrieving external knowledge to counteract hallucination and help the agent act promptly and properly.

- Consistent Action Generation: Mitigating the panic switching problem by ensuring coherent action selection.
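As a rough illustration of the state-to-text translation and the knowledge lookup listed above, the sketch below renders a battle state as a prompt and annotates each move with its type effectiveness. It is not the authors' implementation: `BattleState`, `Move`, `state_to_text`, and the four-entry `TYPE_CHART` excerpt are hypothetical names and data chosen for this example, and a real agent would draw on a full type chart and move database.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical excerpt of a type chart: (attacking type, defending type) -> multiplier.
# Pairs not listed are treated as neutral (1.0) in this sketch.
TYPE_CHART = {
    ("electric", "water"): 2.0,
    ("electric", "ground"): 0.0,
    ("water", "fire"): 2.0,
    ("fire", "water"): 0.5,
}


@dataclass
class Move:
    name: str
    move_type: str
    power: int


@dataclass
class BattleState:
    turn: int
    own_name: str
    own_hp_pct: int
    opp_name: str
    opp_types: Tuple[str, ...]
    opp_hp_pct: int
    moves: List[Move]


def effectiveness(move_type: str, defender_types: Tuple[str, ...]) -> float:
    """Multiply the chart entries for each of the defender's types."""
    multiplier = 1.0
    for defender_type in defender_types:
        multiplier *= TYPE_CHART.get((move_type, defender_type), 1.0)
    return multiplier


def describe(multiplier: float) -> str:
    """Turn a numeric multiplier into the phrase used in the prompt."""
    if multiplier == 0.0:
        return "has no effect on"
    if multiplier > 1.0:
        return "is super effective against"
    if multiplier < 1.0:
        return "is not very effective against"
    return "deals normal damage to"


def state_to_text(state: BattleState, last_feedback: str = "") -> str:
    """Render the state, looked-up type knowledge, and prior feedback as a prompt."""
    lines = [
        f"Turn {state.turn}. Your {state.own_name} has {state.own_hp_pct}% HP.",
        f"The opposing {state.opp_name} ({'/'.join(state.opp_types)}) "
        f"has {state.opp_hp_pct}% HP.",
    ]
    if last_feedback:
        lines.append(f"Feedback from last turn: {last_feedback}")
    for move in state.moves:
        effect = describe(effectiveness(move.move_type, state.opp_types))
        lines.append(
            f"- {move.name} ({move.move_type}, power {move.power}) "
            f"{effect} {state.opp_name}."
        )
    lines.append("Choose one move or switch.")
    return "\n".join(lines)


if __name__ == "__main__":
    state = BattleState(
        turn=3, own_name="Pikachu", own_hp_pct=80,
        opp_name="Vaporeon", opp_types=("water",), opp_hp_pct=100,
        moves=[Move("Thunderbolt", "electric", 90), Move("Quick Attack", "normal", 40)],
    )
    print(state_to_text(state, last_feedback="Quick Attack dealt 6% damage."))
```

Placing the looked-up effectiveness directly in the prompt means the model does not have to recall type matchups from memory, which is the kind of hallucination the knowledge step is meant to reduce.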

During each turn, PokéLLMon refines its policy using its previous actions and the corresponding text-based feedback. It augments the current state information with external knowledge, such as type advantages and weaknesses and the effects of moves and abilities. It then generates multiple actions independently and executes the most consistent one.
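The final step, choosing the most consistent of several independently generated actions, can be approximated with a simple majority vote. The sketch below is an assumption about how such a vote might look, not the paper's code: `consistent_action`, the `sample_action` callback, and the default of `k=3` samples are hypothetical, and the stub sampler stands in for real LLM calls.

```python
from collections import Counter
from typing import Callable, Iterable


def consistent_action(sample_action: Callable[[str], str], prompt: str, k: int = 3) -> str:
    """Sample k actions independently and return the most frequent one.

    Ties are resolved in favor of the action that was sampled first.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    # Normalize so that trivial formatting differences do not split the vote.
    candidates = [sample_action(prompt).strip().lower() for _ in range(k)]
    action, _votes = Counter(candidates).most_common(1)[0]
    return action


def make_stub_sampler(outputs: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM call: replays canned outputs in order."""
    iterator = iter(outputs)
    return lambda prompt: next(iterator)


if __name__ == "__main__":
    # One sample "panics" and switches; the other two agree on attacking.
    sampler = make_stub_sampler(
        ["switch gyarados", "move thunderbolt", "Move Thunderbolt "]
    )
    print(consistent_action(sampler, "Turn 3. Choose one move or switch."))
```

Because a single outlying sample is outvoted, an isolated impulse to switch does not reach the game unless most samples agree on it.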

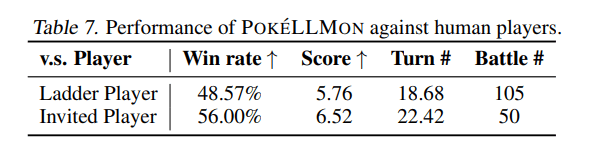

The researchers find that agents using chain-of-thought reasoning tend to exhibit panic switching when confronted by formidable opponents, and that consistent action generation effectively mitigates this issue. In online battles against human players, PokéLLMon shows humanlike battle abilities, achieving a 49% win rate in Ladder competitions and a 56% win rate in invited battles.

The team underscores the versatility of PokéLLMon’s architecture, noting that it can be adapted to design LLM-embodied agents for many other games. By addressing hallucination and action inconsistency, PokéLLMon is, to the best of the team’s knowledge, the first LLM-embodied agent with human-parity performance in tactical battle games.

The implementation and playable battle logs are available at the project’s GitHub. The paper PokéLLMon: A Human-Parity Agent for Pokémon Battles with Large Language Models is on arXiv.

Author: Hecate He | Editor: Chain Zhang
