Text embedding, the process of converting text into numerical representations, stands as a crucial component in natural language processing (NLP) tasks. It facilitates the comprehension of meaning and context (semantic relationships) within data, as well as the discovery of intricate patterns and relationships.

However, high-performing embedding models with a context length beyond 2048 have been hard to access because they are closed-source. Moreover, many of the leading open-source long-context embedding models have substantial inference requirements, which makes them impractical for many engineering applications.

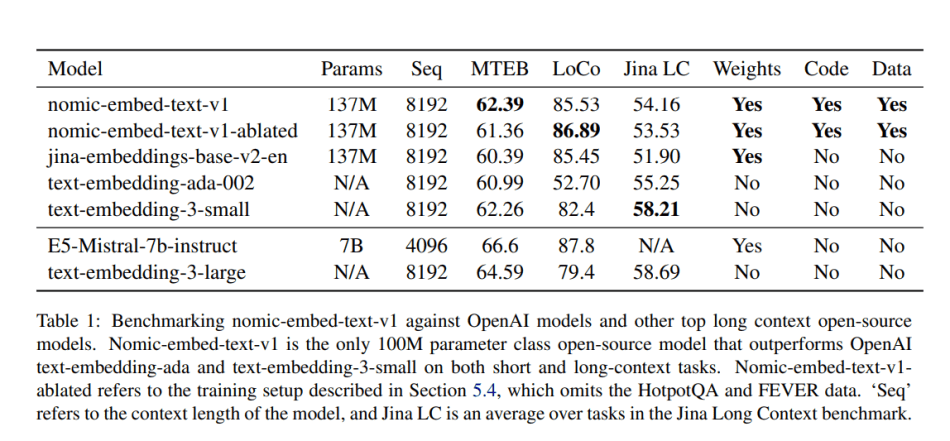

In a new paper, Nomic Embed: Training a Reproducible Long Context Text Embedder, a Nomic AI research team introduces nomic-embed-text-v1, the first fully reproducible, open-source, open-weights, open-data English text embedding model with a context length of 8192. nomic-embed-text-v1 outperforms both OpenAI Ada-002 and OpenAI text-embedding-3-small on short- and long-context tasks.

This technical report meticulously outlines the training methodology behind nomic-embed-text-v1. To craft a model capable of accommodating lengthy sequences, the researchers began by adapting BERT, focusing on achieving an 8192 sequence length. The adaptation involved a series of architectural modifications and optimizations to the BERT base:

- Substituting absolute positional embeddings with rotary positional embeddings.

- Implementing SwiGLU activation in lieu of GeLU.

- Incorporating Flash Attention.

- Setting Dropout to 0.

- Ensuring the vocabulary size is a multiple of 64.
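Three of these changes are easy to illustrate in isolation. The following is a minimal plain-Python sketch, not the authors' implementation: the function names are hypothetical, the rotary base of 10000 is the conventional default rather than a value taken from the paper, and a real model would apply these operations to tensors inside attention and feed-forward layers. Flash Attention and dropout are omitted because they do not change the underlying math.

```python
import math


def pad_vocab_size(vocab_size, multiple=64):
    """Round a vocabulary size up to the nearest multiple (64 here)."""
    return ((vocab_size + multiple - 1) // multiple) * multiple


def rotary_embed(vec, position, base=10000.0):
    """Apply a rotary positional embedding to one query or key vector.

    Consecutive pairs (vec[i], vec[i + 1]) are rotated by an angle that
    grows with the token position and shrinks with the pair index.
    """
    dim = len(vec)
    if dim % 2 != 0:
        raise ValueError("vector dimension must be even")
    out = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * cos_t - y * sin_t, x * sin_t + y * cos_t])
    return out


def silu(x):
    """SiLU (swish) activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def swiglu_gate(gate, value):
    """Elementwise SwiGLU gating: silu(gate) * value.

    In a full feed-forward block, `gate` and `value` would be two separate
    linear projections of the same hidden state.
    """
    return [silu(g) * v for g, v in zip(gate, value)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# The standard bert-base-uncased vocabulary has 30522 entries.
print(pad_vocab_size(30522))  # 30528

# Attention scores under rotary embeddings depend only on relative position:
# positions (5, 3) and (12, 10) share the same offset, so the scores match.
q, k = [0.3, -1.2, 0.7, 0.5], [1.0, 0.2, -0.4, 0.9]
score_a = dot(rotary_embed(q, 5), rotary_embed(k, 3))
score_b = dot(rotary_embed(q, 12), rotary_embed(k, 10))
print(abs(score_a - score_b) < 1e-9)
```

Because the score depends on the offset between positions rather than on absolute indices, rotary embeddings are a natural fit for models meant to handle longer sequences than absolute position tables would allow.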

For Masked Language Modeling pretraining, the team utilized BooksCorpus and a Wikipedia dump from 2023 to train a BERT model tailored for long contexts, dubbed nomic-bert-2048.

During the Unsupervised Contrastive Pretraining phase, the team leveraged large collections of publicly available data to form pairs, amassing a total of 470 million pairs from 29 datasets. To filter out noisy pairs, they used the gte-base model instead of the all-MiniLM-L6-v2 model. During training, pairs were sampled from one data source at a time to discourage the model from learning source-specific shortcuts.
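To make the contrastive objective concrete, here is a minimal sketch of an InfoNCE-style loss with in-batch negatives, a common choice for this kind of pretraining. It is an illustration under assumptions rather than the paper's training code: the function names, the toy two-dimensional vectors, and the temperature of 0.05 are all hypothetical.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def info_nce_loss(queries, documents, temperature=0.05):
    """Mean InfoNCE loss over a batch of (query, document) pairs.

    documents[i] is the positive for queries[i]; every other document in
    the batch serves as a negative for that query.
    """
    q = [normalize(v) for v in queries]
    d = [normalize(v) for v in documents]
    total = 0.0
    for i, q_i in enumerate(q):
        logits = [sum(a * b for a, b in zip(q_i, d_j)) / temperature for d_j in d]
        # Numerically stable log-sum-exp over all documents in the batch.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_denominator - logits[i]
    return total / len(q)


# Toy batch: each query points the same way as its paired document.
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned_docs = [[1.0, 0.1], [0.1, 1.0]]
shuffled_docs = [[0.1, 1.0], [1.0, 0.1]]

# Correctly paired batches give a lower loss than mismatched ones.
print(info_nce_loss(queries, aligned_docs) < info_nce_loss(queries, shuffled_docs))
```

Filling a batch from a single data source, as described above, means the in-batch negatives come from the same distribution as the positive, so the model cannot lower the loss merely by recognizing which dataset a text came from.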

Supervised Contrastive Fine-tuning was conducted across various datasets, including MSMarco, NQ, NLI, FEVER, and HotpotQA. The team trained on the released training sets from the BEIR benchmark and, for retrieval datasets whose negatives had not already been mined, mined additional negatives using gte-base.
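Negative mining can be sketched as a nearest-neighbor search that skips the labeled positives. The snippet below shows one common approach and is not necessarily the procedure the authors followed: the function names, document ids, and toy vectors are hypothetical, and in practice the embeddings would come from an encoder such as gte-base.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def mine_hard_negatives(query_vec, doc_vecs, positive_ids, k=2):
    """Return ids of the k documents most similar to the query
    that are not labeled as positives for it."""
    scored = [
        (cosine(query_vec, vec), doc_id)
        for doc_id, vec in doc_vecs.items()
        if doc_id not in positive_ids
    ]
    scored.sort(reverse=True)  # most similar first
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical precomputed embeddings for one query and four documents.
query = [1.0, 0.0]
docs = {
    "pos": [1.0, 0.05],
    "near": [0.9, 0.3],
    "mid": [0.5, 0.5],
    "far": [0.0, 1.0],
}

print(mine_hard_negatives(query, docs, positive_ids={"pos"}, k=2))  # ['near', 'mid']
```

Documents that score high without being relevant are harder for the model to separate from the true positive than random ones, which is why they make useful training negatives.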

In their empirical evaluation, nomic-bert-2048 was assessed on the GLUE benchmark, while nomic-embed-text-v1 underwent evaluation on MTEB, Jina’s Long Context Benchmark, and LoCo. Notably, nomic-embed-text-v1 surpassed text-embedding-ada-002 and jina-embeddings-v2-base-en across various benchmarks. In long context assessments, particularly LoCo and Jina’s Long Context Benchmark, nomic-embed-text-v1 consistently outperformed jina-embeddings-v2-base-en. Additionally, it outperformed text-embedding-ada-002 on LoCo and on two out of four datasets in Jina’s Long Context Benchmark.

The paper Nomic Embed: Training a Reproducible Long Context Text Embedder is on arXiv.

Author: Hecate He | Editor: Chain Zhang
