Deep learning research has focused largely on media that humans interpret directly, such as text, images, and audio. Native binary data, although it pervades the digital world, has received far less attention.

Bytes are the basic units of digital information. All data, devices, and software ultimately reduce to them, from the instructions executed by computer processors to the operating systems of everyday electronics. Training models on next-byte prediction therefore offers a path toward understanding and simulating a wide range of digital phenomena.

In the new paper Beyond Language Models: Byte Models are Digital World Simulators, a research team from Microsoft Research Asia, the Central Conservatory of Music, and Tsinghua University introduces bGPT, a model designed specifically to process binary data and simulate the digital world through next-byte prediction. Because bGPT reads and generates raw bytes directly, it is not limited to the file formats and modalities that conventional deep learning models are built around.

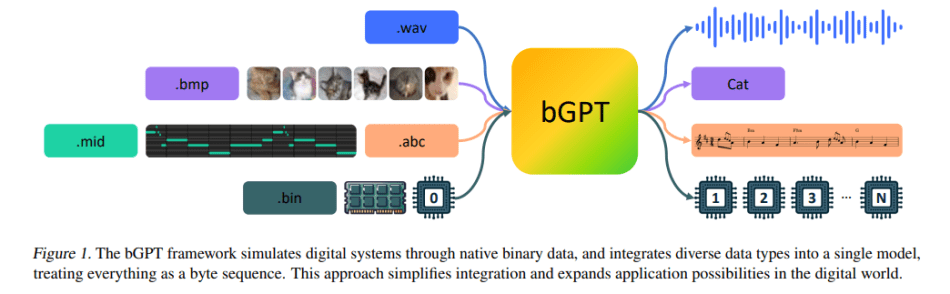

Working at the byte level lets a model learn patterns inside digital systems and also provides a single method for combining different data types within one framework. The bGPT framework builds on this idea: it simulates digital systems from native binary data and represents data from different modalities as one continuous byte sequence. This simplifies data integration and widens the range of tasks the model can be applied to.
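To make the idea of a unified byte representation concrete, here is a minimal Python sketch, not taken from the bGPT codebase, that turns three different kinds of data into the same alphabet of integers 0 to 255 and concatenates them into one sequence. The helper names (`encode_text`, `encode_pcm16`, `encode_raw`) and the choice of 16-bit little-endian PCM for audio samples are illustrative assumptions.

```python
import struct
from typing import List


def encode_text(text: str) -> List[int]:
    """Text becomes its UTF-8 bytes (hypothetical helper)."""
    return list(text.encode("utf-8"))


def encode_pcm16(samples: List[int]) -> List[int]:
    """Audio samples become 16-bit signed little-endian PCM bytes (assumed format)."""
    return list(struct.pack(f"<{len(samples)}h", *samples))


def encode_raw(blob: bytes) -> List[int]:
    """Any file's bytes (e.g. a MIDI header) are used as-is."""
    return list(blob)


# Three modalities, one vocabulary: every element is an integer in [0, 255].
unified = encode_text("X:1\n") + encode_pcm16([1, -2]) + encode_raw(b"MThd")
print(unified)
```

Because every input ends up in the same 256-symbol vocabulary, a single next-byte predictor can in principle be trained on all of them without modality-specific tokenizers.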

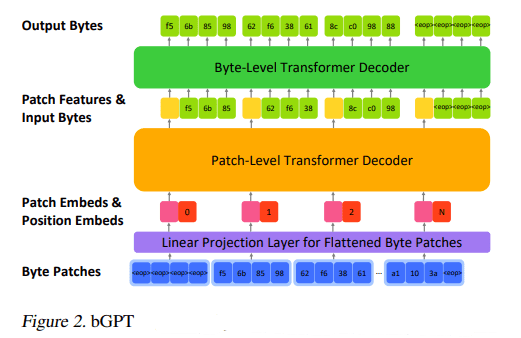

bGPT is built on a hierarchical Transformer with three components: a linear projection layer, a patch-level decoder, and a byte-level decoder. The input byte sequence is split into fixed-size patches, and the linear projection layer maps each patch to an embedding. The patch-level decoder then predicts the features of the next patch, and the byte-level decoder uses those features to reconstruct the individual bytes inside that patch. Grouping bytes into patches shortens the sequence the patch-level decoder has to attend over, which makes long byte sequences more tractable.
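The front end of this pipeline (patching plus linear projection) can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the official implementation: the patch size of 16, the extra special id 256 used for padding, and the one-hot encoding of each byte before projection are all assumptions made for the example, and the two decoders are omitted.

```python
from typing import List

PAD = 256    # assumed special id outside the 0-255 byte range, used for padding
VOCAB = 257  # assumed vocabulary: 256 byte values + 1 special id


def to_patches(data: bytes, patch_size: int = 16) -> List[List[int]]:
    """Split a byte string into fixed-size patches, padding the last one with PAD."""
    patches = []
    for start in range(0, len(data), patch_size):
        chunk = list(data[start:start + patch_size])
        chunk += [PAD] * (patch_size - len(chunk))
        patches.append(chunk)
    return patches


def one_hot_patch(patch: List[int]) -> List[float]:
    """Concatenate one one-hot vector per id; length is len(patch) * VOCAB."""
    vec = [0.0] * (len(patch) * VOCAB)
    for i, byte_id in enumerate(patch):
        vec[i * VOCAB + byte_id] = 1.0
    return vec


def linear_projection(vec: List[float], weights: List[List[float]]) -> List[float]:
    """Map a flattened one-hot patch to a d-dimensional embedding (d = len(weights))."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


patches = to_patches(b"hello, byte world!")  # 18 bytes -> 2 patches of 16
print(len(patches), patches[1][:3])
```

In the full model, the resulting patch embeddings would feed the patch-level decoder, so its sequence length is roughly the byte count divided by the patch size.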

bGPT offers two main advantages. First, it can interpret digital systems: by training on byte sequences, the model learns to predict how those systems behave, which makes it possible to simulate and diagnose the behavior of algorithms or hardware. Second, it offers a unified modeling approach: because every input is treated as a byte sequence, diverse data types fit into a single framework, which simplifies modeling and makes it easier to combine heterogeneous data sources.
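To illustrate what "simulating hardware behavior from bytes" can mean, the following sketch generates byte traces from a tiny register machine. Everything here is hypothetical: the four registers, the opcodes `MOV`, `ADD`, `INC`, `DEC`, and the three-byte instruction format are invented for the example and are not the instruction set used in the paper. Each trace interleaves register states and instructions, so a next-byte model trained on such traces would have to learn how each instruction changes the state.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical opcodes for a toy 4-register machine (not the paper's instruction set).
MOV, ADD, INC, DEC = 0, 1, 2, 3

Instr = Tuple[int, int, int]  # (opcode, register index, immediate value)


def step(regs: List[int], instr: Instr) -> List[int]:
    """Execute one instruction; register values wrap around modulo 256."""
    op, r, val = instr
    out = list(regs)
    if op == MOV:
        out[r] = val
    elif op == ADD:
        out[r] = (out[r] + val) % 256
    elif op == INC:
        out[r] = (out[r] + 1) % 256
    elif op == DEC:
        out[r] = (out[r] - 1) % 256
    else:
        raise ValueError(f"unknown opcode {op}")
    return out


def trace_bytes(program: Sequence[Instr], regs: Optional[List[int]] = None) -> bytes:
    """Serialize initial state, then (instruction, resulting state) pairs, as bytes."""
    regs = [0, 0, 0, 0] if regs is None else list(regs)
    seq = bytearray(regs)
    for instr in program:
        seq.extend(instr)
        regs = step(regs, instr)
        seq.extend(regs)
    return bytes(seq)


trace = trace_bytes([(MOV, 0, 250), (ADD, 0, 10), (INC, 1, 0), (DEC, 2, 0)])
print(list(trace[-4:]))
```

Predicting the four state bytes after each instruction is exactly a next-byte prediction task, which is why a byte model can be evaluated on how accurately it reproduces the machine's behavior.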

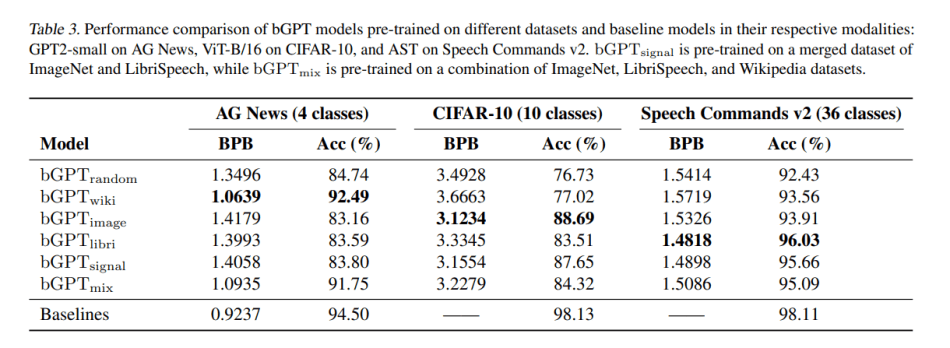

In experiments, bGPT performs well across modalities including text, audio, and images, and it also opens new ways to predict, simulate, and diagnose the behavior of algorithms and hardware. It is particularly accurate at replicating the conversion of symbolic music data, reaching a loss of only 0.0011 bits per byte when converting ABC notation to MIDI format. It also simulates CPU behavior with an accuracy exceeding 99.99% across various operations.
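Bits per byte (BPB) is the average number of bits the model needs to encode each byte, computed as BPB = -(1/N) · Σ log2 p(x_i | x_<i) over the N bytes of a sequence, where p is the probability the model assigned to the byte that actually occurred. The sketch below computes it from a list of such probabilities; the function names are illustrative, and converting from a natural-log cross-entropy loss is a standard identity rather than anything specific to bGPT.

```python
import math
from typing import Sequence


def bits_per_byte(probs: Sequence[float]) -> float:
    """Average -log2 probability assigned to each correct next byte."""
    if not probs:
        raise ValueError("need at least one probability")
    if any(p <= 0.0 or p > 1.0 for p in probs):
        raise ValueError("probabilities must lie in (0, 1]")
    return -sum(math.log2(p) for p in probs) / len(probs)


def nats_to_bpb(loss_nats: float) -> float:
    """Convert a mean cross-entropy loss in nats per byte to bits per byte."""
    return loss_nats / math.log(2)


print(bits_per_byte([0.5, 0.25, 1.0, 1.0]))  # (1 + 2 + 0 + 0) / 4 = 0.75
```

Read this way, a BPB of 0.0011 means the model assigns the correct byte a geometric-mean probability of about 2^(-0.0011) ≈ 0.9992, i.e. the conversion is reproduced almost deterministically.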

In summary, the study shows that bGPT models digital media data effectively and supports modality-agnostic knowledge transfer. It performs comparably to specialized models across diverse datasets without any modality-specific design, and it handles data conversion and CPU state modeling with high accuracy. Together, these results make bGPT a promising tool for simulating a wide range of algorithms and hardware.

The code is available on the project’s GitHub. The paper Beyond Language Models: Byte Models are Digital World Simulators is on arXiv.

Author: Hecate He | Editor: Chain Zhang

