Generative AI has advanced rapidly in recent years, and models can now create novel and imaginative content. Progress has come across many domains, and video generation has emerged as a particularly promising frontier.

Recent advances suggest that scaling up video generation models could further improve their capabilities. However, these models still offer far less interactive engagement than language tools such as ChatGPT, let alone more immersive experiences.

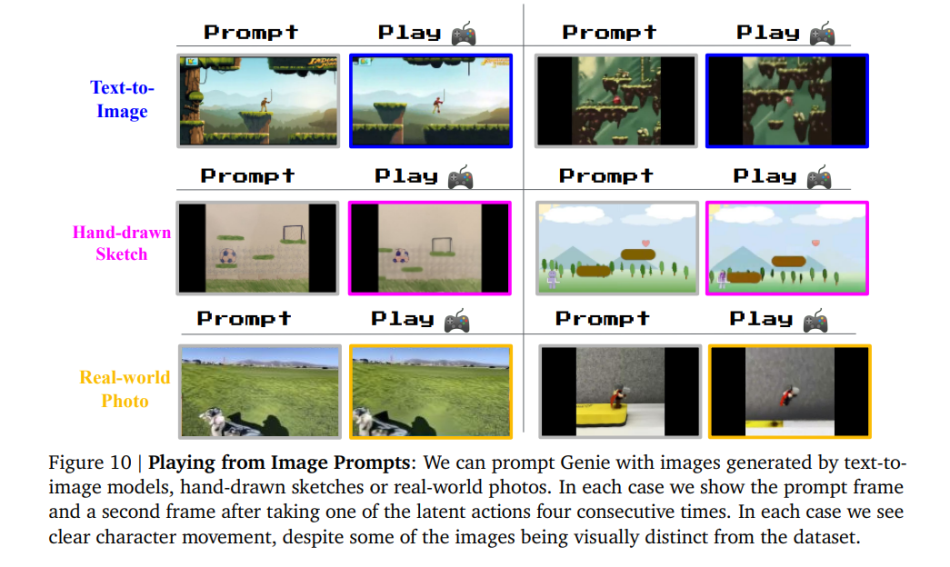

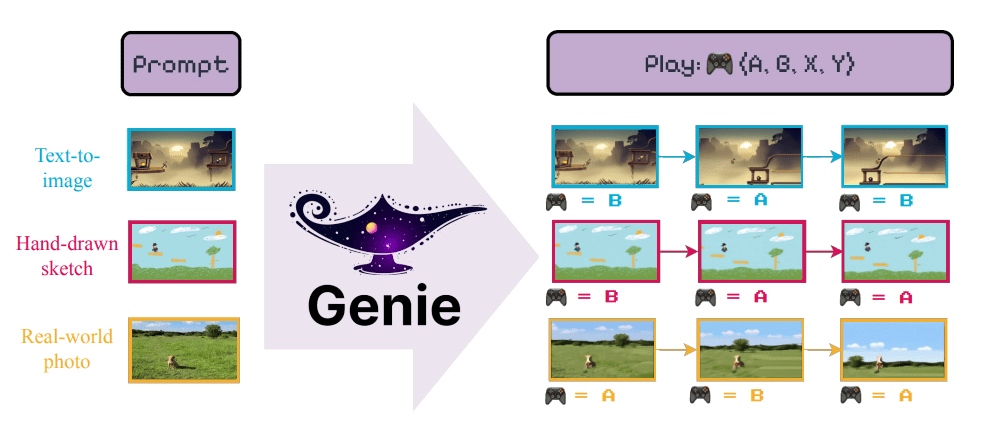

To address this gap, a research team from Google DeepMind and the University of British Columbia presents Genie in the new paper Genie: Generative Interactive Environments. The team describes Genie as the first generative interactive environment, able to generate a diverse array of controllable virtual worlds from text prompts, synthetic images, photographs, and even sketches.

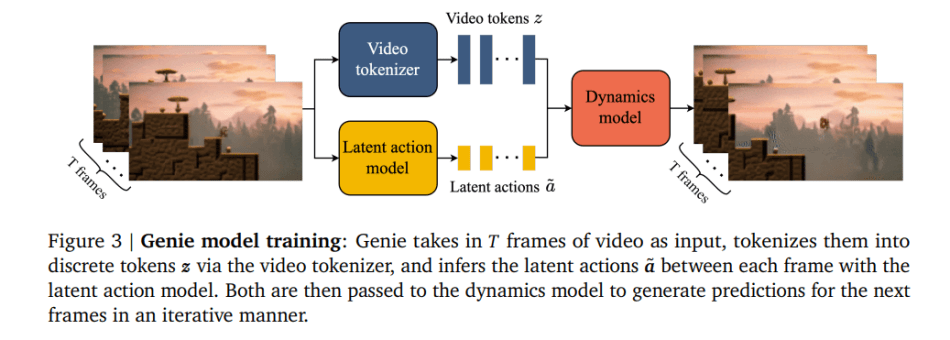

Genie builds on ideas from state-of-the-art video generation models, and its central design choice is the spatiotemporal (ST) transformer. Genie uses a novel video tokenizer and extracts latent actions with a causal action model. The latent actions and video tokens are then passed to a dynamics model, which autoregressively predicts the next frame using MaskGIT.
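To make the spatiotemporal idea more concrete, here is a minimal sketch of the two attention patterns an ST transformer is commonly described as combining: spatial attention among the tokens of a single frame, and causal temporal attention across frames at the same spatial position. It assumes tokens are laid out frame by frame, and the helper names `st_attention_masks` and `count_allowed` are hypothetical. The sketch builds only the boolean masks, not the attention computation itself, and is not taken from Genie's code.

```python
from typing import List, Tuple

Mask = List[List[bool]]


def st_attention_masks(num_frames: int, tokens_per_frame: int) -> Tuple[Mask, Mask]:
    """Build spatial and causal-temporal attention masks for a flattened video.

    Hypothetical helper: token index i = frame * tokens_per_frame + position.
    spatial[i][j] is True when tokens i and j belong to the same frame.
    temporal[i][j] is True when they share a spatial position and j's frame
    is not later than i's, so no token can attend to future frames.
    """
    total = num_frames * tokens_per_frame
    spatial = [[False] * total for _ in range(total)]
    temporal = [[False] * total for _ in range(total)]
    for i in range(total):
        frame_i, pos_i = divmod(i, tokens_per_frame)
        for j in range(total):
            frame_j, pos_j = divmod(j, tokens_per_frame)
            spatial[i][j] = frame_i == frame_j
            temporal[i][j] = pos_i == pos_j and frame_j <= frame_i
    return spatial, temporal


def count_allowed(mask: Mask) -> int:
    """Number of (query, key) pairs that a mask lets through."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    frames, tokens = 4, 16
    spatial, temporal = st_attention_masks(frames, tokens)
    print(count_allowed(spatial))   # 1024 = 4 frames * 16 * 16
    print(count_allowed(temporal))  # 160 = 16 positions * (1 + 2 + 3 + 4)
    print((frames * tokens) ** 2)   # 4096 pairs for full attention over all tokens
```

Because each token attends only within its own frame or along its own temporal column, the number of attended pairs grows much more slowly than full attention as videos get longer, which is the usual motivation for factorizing attention this way.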

Genie comprises three key components: a latent action model, which infers the latent action between each pair of frames; a video tokenizer, which converts raw video frames into discrete tokens; and a dynamics model, which predicts the next frame given a latent action and the tokens of past frames.
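How the three components might fit together at play time can be sketched as a simple loop: tokenize a starting frame, then repeatedly let the user choose a discrete latent action and ask the dynamics model for the next frame's tokens. This is a toy sketch under heavy assumptions: frames are lists of scalar patch values, the tokenizer is a nearest-codebook (vector-quantization style) lookup, the action vocabulary size of 8 is an illustrative choice, and `toy_dynamics` is a hypothetical placeholder for the learned dynamics model.

```python
from typing import Callable, List, Sequence

Tokens = List[int]
# A dynamics model maps (token history, latent action) -> next frame's tokens.
Dynamics = Callable[[List[Tokens], int], Tokens]


def tokenize(frame: Sequence[float], codebook: Sequence[float]) -> Tokens:
    """Map each scalar patch value to the index of its nearest codebook entry."""
    return [min(range(len(codebook)), key=lambda k: abs(x - codebook[k])) for x in frame]


def toy_dynamics(history: List[Tokens], action: int, vocab_size: int = 3) -> Tokens:
    """Hypothetical stand-in: shift the latest frame's tokens by the action id."""
    return [(tok + action) % vocab_size for tok in history[-1]]


def play(first_frame: Sequence[float], codebook: Sequence[float],
         dynamics: Dynamics, actions: Sequence[int], num_actions: int = 8) -> List[Tokens]:
    """Roll out one frame of tokens per user-chosen latent action."""
    history = [tokenize(first_frame, codebook)]
    for action in actions:
        if not 0 <= action < num_actions:
            raise ValueError(f"latent action {action} outside [0, {num_actions})")
        # Each step conditions on all past frame tokens plus the chosen action.
        history.append(dynamics(history, action))
    return history


if __name__ == "__main__":
    codebook = [0.0, 0.5, 1.0]
    frames = play([0.1, 0.6, 0.9], codebook, toy_dynamics, actions=[1, 2])
    print(frames)  # [[0, 1, 2], [1, 2, 0], [0, 1, 2]]
```

A real system would also decode the predicted tokens back into pixels before showing them to the user; the sketch stops at the token level to keep the control flow visible.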

The model is trained in two phases, following a standard autoregressive video generation pipeline. The team first trains the video tokenizer, whose tokens are later used by the dynamics model. They then co-train the latent action model (directly on pixels) alongside the dynamics model (on video tokens).
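The ordering of the two training phases can be sketched as follows. The `Component` class and the `two_phase_training` function are hypothetical stand-ins that only record which data each model is updated on. Freezing the tokenizer after phase one is an assumption made here to show that its tokens act as fixed inputs for the dynamics model; it is not a detail stated above.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Component:
    """Hypothetical stand-in for a trainable model that just logs its updates."""
    name: str
    frozen: bool = False
    updates: List[str] = field(default_factory=list)

    def train_step(self, batch: str) -> None:
        if self.frozen:
            raise RuntimeError(f"{self.name} is frozen and must not be updated")
        self.updates.append(batch)


def two_phase_training(batches: List[str]) -> Tuple[Component, Component, Component]:
    tokenizer = Component("video_tokenizer")
    latent_actions = Component("latent_action_model")
    dynamics = Component("dynamics_model")

    # Phase 1: train the video tokenizer on its own.
    for batch in batches:
        tokenizer.train_step(batch)
    tokenizer.frozen = True  # assumption: kept fixed during phase 2

    # Phase 2: co-train the latent action model on raw pixels and the
    # dynamics model on video tokens produced by the tokenizer.
    for batch in batches:
        latent_actions.train_step(f"{batch}:pixels")
        dynamics.train_step(f"{batch}:tokens")
    return tokenizer, latent_actions, dynamics


if __name__ == "__main__":
    tok, lam, dyn = two_phase_training(["clip0", "clip1"])
    print(tok.updates)  # ['clip0', 'clip1']
    print(lam.updates)  # ['clip0:pixels', 'clip1:pixels']
    print(dyn.updates)  # ['clip0:tokens', 'clip1:tokens']
```

In a real setup each `train_step` would compute a loss and apply an optimizer update; the sketch only makes the phase ordering and the pixels-versus-tokens split explicit.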

Empirical results show that Genie can generate high-quality, controllable videos across diverse domains while being trained solely on video data. Moreover, the learned latent action space makes it possible to train agents that imitate behaviors from previously unseen videos, which the team sees as a path toward more general agents in the future.

The paper Genie: Generative Interactive Environments is on arXiv.

Author: Hecate He | Editor: Chain Zhang
