AlphaFold2 (AF2), developed by DeepMind, is an artificial intelligence (AI) model that predicts the three-dimensional (3D) structures of proteins from their amino acid sequences with atomic-level accuracy. Although it is widely regarded as a breakthrough in protein folding, training the model is slow, and the training process has so far gained little from scaling up computational resources.

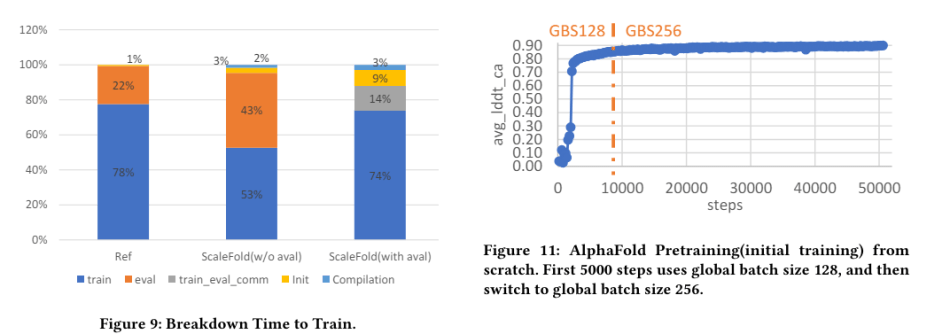

In a new paper, ScaleFold: Reducing AlphaFold Initial Training Time to 10 Hours, a team of researchers from NVIDIA presents ScaleFold, a scalable training method tailored to the AlphaFold model. The method completes the OpenFold partial training task in 7.51 minutes, over six times faster than the benchmark baseline, and cuts AlphaFold's initial training time to 10 hours.

The team outlines their key contributions as follows:

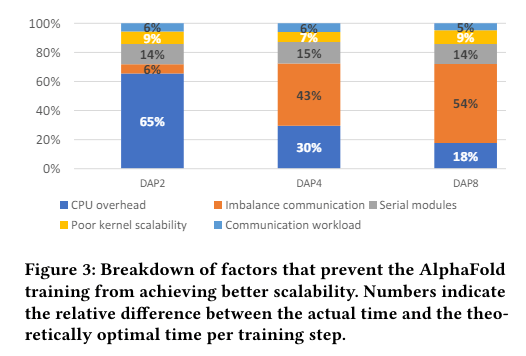

- Identification of Scaling Bottlenecks: Pinpointing the primary factors impeding AlphaFold’s scalability to increased computational resources.

- Introduction of ScaleFold: Introducing a systematic and scalable training approach specifically designed for the AlphaFold model.

- Empirical Demonstration of Scalability: Establishing new performance benchmarks for AlphaFold pretraining and the MLPerf HPC benchmark, underscoring ScaleFold’s scalability and efficacy.

The team carried out a comprehensive analysis of the AlphaFold training process and identified two main obstacles to scalability. First, communication during distributed training was intensive yet inefficient, owing to communication overhead and load imbalance caused by slow workers. Second, computational resources were underutilized because of local CPU overhead, non-parallelizable workloads, and poor kernel scalability.
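The effect of slow workers on synchronous distributed training can be illustrated with a small simulation. This is a toy sketch, not the paper's profiling method: the function names, the 1% slow-worker probability, and the step times are all hypothetical values chosen for illustration. It assumes each step ends only when the slowest worker finishes, so the chance that at least one worker is slow grows with the worker count.

```python
import random


def sync_step_time(worker_times):
    """A synchronous step finishes only when the slowest worker does."""
    return max(worker_times)


def simulate(num_workers, steps=200, slow_prob=0.01, base=1.0, slow=3.0, seed=0):
    """Average step time when each worker is occasionally slow.

    All parameters are illustrative assumptions, not measurements.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(steps):
        # Each worker independently takes `slow` time with probability slow_prob.
        times = [slow if rng.random() < slow_prob else base
                 for _ in range(num_workers)]
        total += sync_step_time(times)
    return total / steps


for n in (8, 128, 2048):
    print(n, "workers -> average step time", round(simulate(n), 3))
```

Under these assumptions, a rare per-worker slowdown has little effect at small scale but determines almost every step at thousands of workers, which suggests why imbalance becomes a dominant cost as training scales out.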

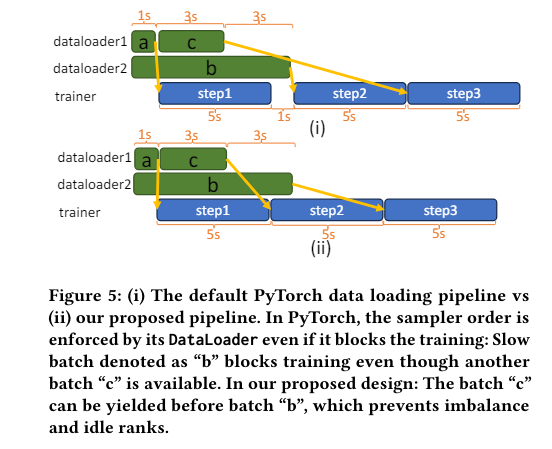

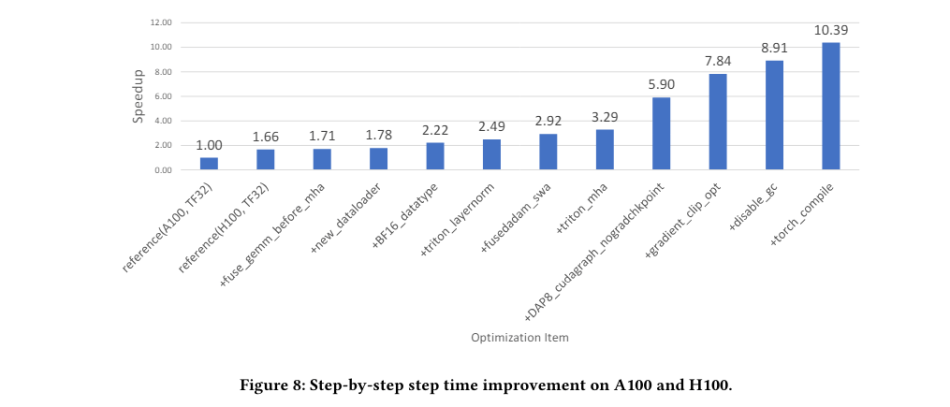

To overcome these challenges, the researchers devised a set of systematic optimizations. They introduced a non-blocking data pipeline to address the slow-worker problem and applied fine-grained optimizations, such as using CUDA Graphs to capture the training step and reduce overhead, which significantly improved communication efficiency.
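The idea behind a non-blocking data pipeline can be sketched with the Python standard library. This is a minimal illustration, not ScaleFold's implementation: it assumes that "non-blocking" means handing the trainer whichever batch is ready first instead of waiting for batches in submission order, and `load_batch`, `in_order_batches`, and `ready_first_batches` are hypothetical names.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def load_batch(index, delay):
    """Stand-in for loading and preprocessing; `delay` mimics a slow sample."""
    time.sleep(delay)
    return index


def in_order_batches(delays, workers=4):
    """Blocking behaviour: batches are returned strictly in submission order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(load_batch, i, d) for i, d in enumerate(delays)]
        for fut in futures:
            # Waits for batch i even if later batches are already ready.
            yield fut.result()


def ready_first_batches(delays, workers=4):
    """Non-blocking behaviour: yield whichever batch finishes first."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(load_batch, i, d) for i, d in enumerate(delays)]
        for fut in as_completed(futures):
            yield fut.result()


# Batch 0 is artificially slow; the others are fast.
delays = [0.3, 0.01, 0.01, 0.01]
print("in order:   ", list(in_order_batches(delays)))
print("ready first:", list(ready_first_batches(delays)))
```

In the in-order version, the consumer stalls on the slow first batch while the fast ones sit idle. The ready-first version lets training proceed with the fast batches, at the cost of a batch order that is no longer fixed.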

Furthermore, the team identified essential computation patterns within AlphaFold training and developed dedicated Triton kernels for each, consolidating fragmented computations across the model and meticulously tuning kernel configurations for varying workload sizes and hardware architectures. This amalgamation of optimizations gave birth to ScaleFold.

Empirical results show that ScaleFold overcomes these scalability hurdles: it enabled AlphaFold training on 2080 NVIDIA H100 GPUs, up from a previous limit of 512. In the MLPerf HPC v3.0 benchmark, ScaleFold completed the OpenFold partial training task in 7.51 minutes, over six times faster than the benchmark baseline. When training the AlphaFold model from scratch, ScaleFold completed pretraining in just 10 hours, setting a new record compared with prior approaches.

The researchers envision their work as a boon to the HPC and bioinformatics research communities, offering an effective framework for scaling deep-learning-based computational methods to tackle complex protein folding problems. Additionally, they hope that the workload profiling and optimization methodologies employed in this study will illuminate future machine learning system designs and implementations.

The paper ScaleFold: Reducing AlphaFold Initial Training Time to 10 Hours is on arXiv.

Author: Hecate He | Editor: Chain Zhang

