Diffusion models (DMs) have become leading generative tools across many domains, combining realism and sample diversity with stable training. Their sequential denoising process, however, makes inference slow and computationally costly.

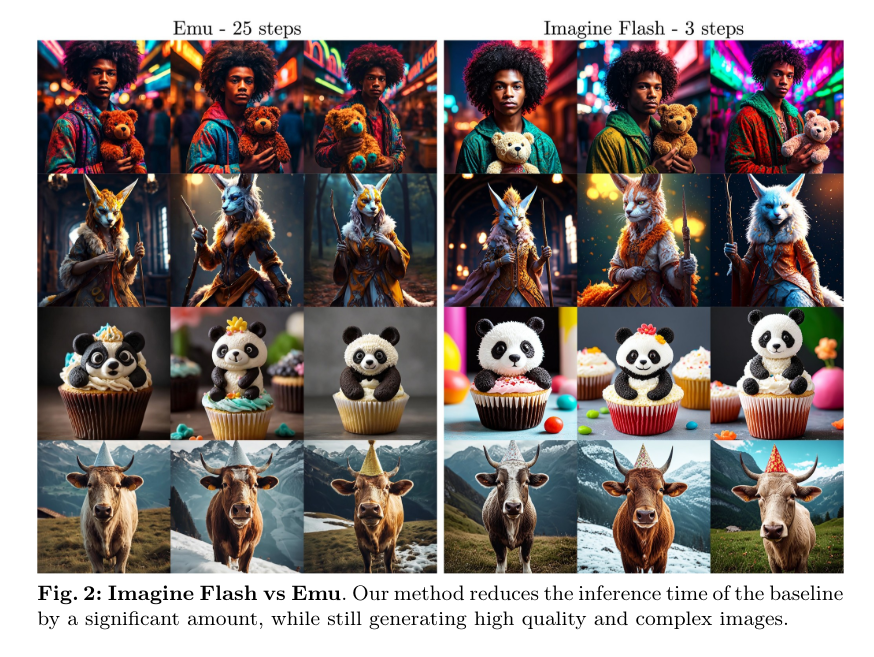

In a new paper, Imagine Flash: Accelerating Emu Diffusion Models with Backward Distillation, a Meta GenAI research team introduces a distillation framework that aims to generate high-fidelity, diverse samples in just one to three denoising steps. According to the paper, the framework outperforms competing methods in both quantitative metrics and human evaluations.

The framework is designed to improve the student model along its own diffusion paths. First, the team introduces Backward Distillation, which calibrates the student model on its own upstream backward trajectory. Because the training inputs come from the student's own denoising path rather than from noised ground-truth images, the gap between training and inference distributions shrinks and no ground-truth signal leaks into the inputs at any time step.
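
To make the contrast concrete, here is a minimal one-dimensional sketch of how the two kinds of training input could be built. It is only an illustration under stated assumptions, not the paper's implementation: `toy_student_step`, the timestep list, and the scalar "images" are all hypothetical stand-ins.

```python
import random

def forward_noised_input(x0, alpha_bar_t, rng):
    """Conventional input: noise a real sample, so x_t still carries signal from x0."""
    eps = rng.gauss(0.0, 1.0)
    return alpha_bar_t ** 0.5 * x0 + (1.0 - alpha_bar_t) ** 0.5 * eps

def backward_student_input(student_step, timesteps, n_steps, rng):
    """Backward-style input: start from pure noise and apply the student's own
    denoising step n_steps times; no ground-truth sample is involved."""
    x = rng.gauss(0.0, 1.0)
    for t in timesteps[:n_steps]:
        x = student_step(x, t)
    return x

def toy_student_step(x, t):
    # Hypothetical stand-in for one student denoising step (assumption).
    return 0.5 * x

rng = random.Random(0)
x_t = backward_student_input(toy_student_step, [3, 2, 1], 2, rng)
```

Note that `backward_student_input` never receives `x0`, which is the sense in which the student's inputs during training match what it sees at inference.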

Secondly, they propose a Shifted Reconstruction Loss, which dynamically adjusts knowledge transfer from the teacher model. This loss distills global structural information at high time steps, while emphasizing rendering fine-grained details and high-frequency components at lower time steps. This adaptive approach enables the student to effectively emulate the teacher’s generation process at different stages of the diffusion trajectory.
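
One way to picture a timestep-dependent target is sketched below. This is a toy under loud assumptions, not the paper's loss: the linear `alpha_bar` schedule, the quadratic `shifted_timestep` map, and the scalar teacher are all hypothetical. The student's clean estimate is re-noised to a shifted timestep and the teacher's denoised output serves as the target, so at high time steps the teacher can still change global structure, while at low time steps only fine detail is left to correct.

```python
import math
import random

T = 1000  # assumed number of training timesteps

def alpha_bar(t):
    # Toy linear signal schedule (assumption): 1 at t=0, 0 at t=T.
    return 1.0 - t / T

def shifted_timestep(t):
    # Hypothetical quadratic map: stays near T for high t, drops to 1 for low t.
    return max(1, (t * t) // T)

def shifted_reconstruction_loss(student_x0, teacher_denoise, t, rng):
    """Squared error between the student's estimate and a teacher target
    computed from that estimate re-noised to the shifted timestep."""
    s = shifted_timestep(t)
    eps = rng.gauss(0.0, 1.0)
    x_s = math.sqrt(alpha_bar(s)) * student_x0 + math.sqrt(1.0 - alpha_bar(s)) * eps
    target = teacher_denoise(x_s, s)
    return (student_x0 - target) ** 2
```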

Lastly, Noise Correction is introduced as an inference-time modification enhancing sample quality by addressing singularities present in noise prediction models during the initial sampling step. This training-free technique mitigates degradation of contrast and color intensity, typical when operating with an extremely low number of denoising steps.
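
The singularity itself can be illustrated with the standard relation between a noise prediction and the implied clean sample, x0 = (x_t - sqrt(1 - ᾱ_t) · ε) / sqrt(ᾱ_t). The sketch below shows only why the first step is fragile; it does not reproduce the paper's correction, and the function names are illustrative.

```python
import math

def x0_from_eps(x_t, eps_hat, alpha_bar_t):
    """Clean-sample estimate implied by a noise prediction eps_hat."""
    return (x_t - math.sqrt(1.0 - alpha_bar_t) * eps_hat) / math.sqrt(alpha_bar_t)

def error_gain(alpha_bar_t):
    """Factor by which an error in eps_hat is amplified in the x0 estimate."""
    return math.sqrt(1.0 - alpha_bar_t) / math.sqrt(alpha_bar_t)
```

As `alpha_bar_t` approaches zero, which is the situation at the very first sampling step, `error_gain` grows without bound, so small errors in the predicted noise dominate the result.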

Combining these three components, the researchers apply their distillation framework to a baseline diffusion model, Emu, to produce Imagine Flash. The resulting model generates in the extremely low-step regime without compromising sample quality or conditioning fidelity. Extensive experiments and human evaluations support the approach, showing favorable trade-offs between sampling efficiency and generation quality across various tasks and modalities.

Overall, this work opens the door to ultra-efficient generative modeling. Imagine Flash, by enabling on-the-fly high-fidelity generation, unlocks new possibilities for real-time creative workflows and interactive media experiences.

The paper Imagine Flash: Accelerating Emu Diffusion Models with Backward Distillation is on arXiv.

Author: Hecate He | Editor: Chain Zhang
