Large language models (LLMs) have showcased remarkable versatility by training on vast amounts of internet data, harnessing tokens that effectively integrate various text modalities including code, mathematical expressions, and multiple natural languages. This achievement has piqued researchers’ curiosity about extending large-scale generative modeling to video data. However, previous efforts in this domain have often been limited to specific types of visual content, shorter durations, or videos of fixed dimensions.

In a recent technical report from OpenAI, a groundbreaking text-to-video model named Sora has been introduced. Sora stands out for its ability to generate videos and images spanning a wide range of durations, aspect ratios, and resolutions, producing up to a minute of high-definition video content.

The report primarily delves into two key aspects: firstly, the methodology employed to transform diverse visual data into a cohesive representation conducive to large-scale generative modeling; and secondly, a qualitative assessment of Sora’s capabilities and constraints. Detailed insights into the model architecture and implementation specifics are not included in this publication.

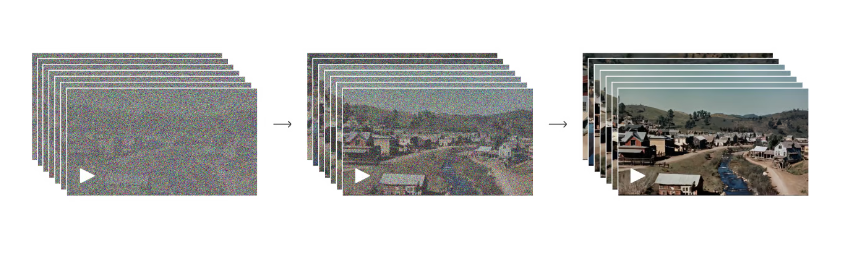

Sora operates on the principles of diffusion modeling, initiating the video generation process from a frame resembling static noise and iteratively refining it by gradually eliminating the noise over multiple steps. Building upon earlier innovations in models like DALL·E and GPT, Sora incorporates the recaptioning technique from DALL·E 3, generating highly descriptive captions for the visual training data. Consequently, the model adeptly translates textual instructions into faithfully executed actions within the generated video content.

Taking raw video data as input, Sora outputs a latent representation that undergoes temporal and spatial compression. By predicting the original “clean” patches from input noisy patches and conditioning information such as text prompts, the model is trained to effectively reconstruct the visual content.

Beyond generating videos solely from textual prompts, Sora exhibits the capability to animate static images with precision and attention to detail. Moreover, it can extend existing videos or seamlessly fill in missing frames, enhancing the fluidity and completeness of the visual content.

In essence, Sora lays the groundwork for models with a deeper understanding of and ability to simulate the real world, marking a significant milestone on the path towards achieving Artificial General Intelligence (AGI).

The technical report Video generation models as world simulators is on OpenAI.

Author: Hecate He | Editor: Chain Zhang

We know you don’t want to miss any news or research breakthroughs. Subscribe to our popular newsletter Synced Global AI Weekly to get weekly AI updates.

Impressive breakthrough! Sora’s pioneering text-to-video model, leveraging diffusion modeling and recaptioning techniques, signals a significant step towards Artificial General Intelligence (AGI). Its seamless translation of textual prompts into dynamic visual narratives and animation of static images showcases remarkable potential for AI-driven creativity.

AI development services

Legal online casinos offer players the thrill of gambling from the comfort of home. With Jackpot City real money games, players can experience the excitement of betting and winning. In Bangladesh, where traditional casinos are limited, online platforms provide accessible avenues for gaming entertainment.

Sora operates on the principles of diffusion modeling, Strands starting the video generation process from a frame resembling static noise and progressively refining it by gradually eliminating the noise over multiple steps.

“Thanks for sharing your views with us. I do agree with all your given points in this article, and it is quite impressive.

Top 15 Best Data Science course In Delhi“

Is there any prompt ew can use for tiktok videos about outwear showcasing; we own an outwear brand SAFYD where we need ad videos consists of showcasing our product. Let me know if there is any help i can find.

thank you.