Large Language Models (LLMs) have proven their mettle across a spectrum of real-world applications, ranging from language modeling to visual comprehension, and even text-to-image and text-to-video generation. Undoubtedly, LLMs stand as pivotal elements in contemporary artificial intelligence. However, alongside their groundbreaking potential, concerns regarding their safe deployment loom large.

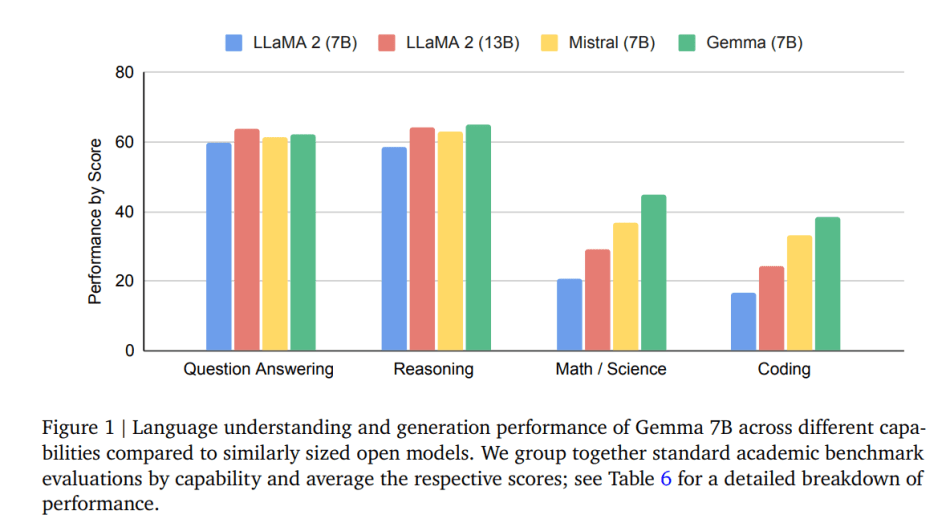

In a new paper, Gemma: Open Models Based on Gemini Research and Technology, the Google DeepMind Gemma Team introduces Gemma, a family of lightweight, state-of-the-art open models built on the same research and technology as the Gemini models. The paper reports stronger performance than similarly sized open models across academic benchmarks for language understanding, reasoning, and safety.

Gemma’s architecture is based on the transformer decoder (Vaswani et al., 2017) and incorporates several improvements proposed after the original transformer paper: Multi-Query Attention (used in the 2B model, while the 7B model uses multi-head attention), RoPE Embeddings, GeGLU Activations, and RMSNorm. These refinements underpin the models’ performance.
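To make two of the components named above more concrete, here is a minimal pure-Python sketch of RMSNorm and a GeGLU gate. It follows the generic textbook definitions and is not Gemma's actual implementation. The function names, the `eps` default, the toy weight matrices, and the use of the exact (erf-based) GELU are all assumptions chosen for illustration.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """Divide x by its root mean square, then apply a per-element learned scale."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [v * inv_rms * w for v, w in zip(x, weight)]


def gelu(v):
    """Exact GELU: v times the standard normal CDF evaluated at v."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))


def matvec(matrix, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in matrix]


def geglu(x, w_gate, w_up):
    """GeGLU: GELU(w_gate @ x) multiplied elementwise with (w_up @ x)."""
    gate = matvec(w_gate, x)
    up = matvec(w_up, x)
    return [gelu(g) * u for g, u in zip(gate, up)]


if __name__ == "__main__":
    hidden = [3.0, 4.0]
    # Hypothetical toy weights; real models learn these during training.
    normed = rms_norm(hidden, weight=[1.0, 1.0])
    print(geglu(normed, w_gate=[[1.0, 0.0]], w_up=[[0.0, 1.0]]))
```

Unlike LayerNorm, RMSNorm skips mean subtraction and rescales only by the root mean square. GeGLU replaces a single feed-forward activation with a GELU-gated product of two linear projections.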

Gemma is available in two sizes: a 7-billion-parameter model for efficient deployment and development on GPU and TPU platforms, and a 2-billion-parameter model for CPU and on-device applications. The Gemma models were trained on up to 6T tokens of text, using architectures, datasets, and training recipes similar to those of the Gemini model family.

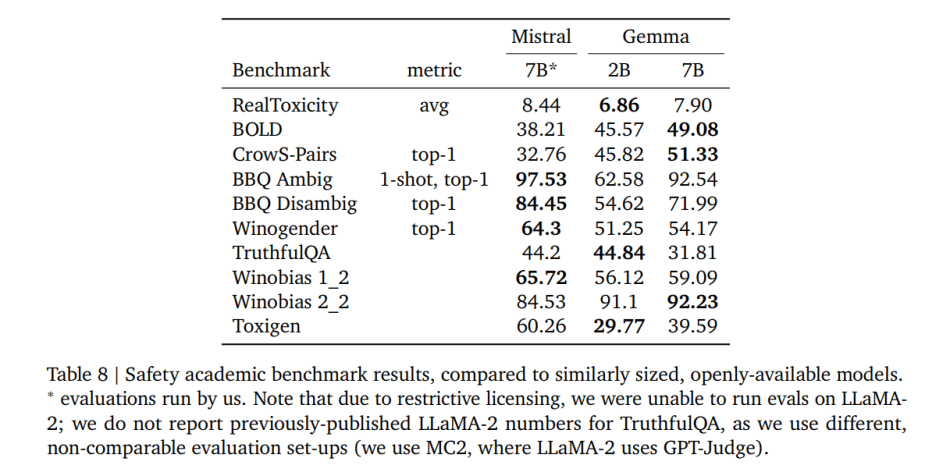

Furthermore, the researchers introduce the Responsible Generative AI Toolkit, which provides guidance and essential tools for crafting safer AI applications with Gemma. Automated techniques were applied to filter out sensitive information from training sets. Additionally, Gemma 2B and 7B were fine-tuned using supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF), enhancing their efficacy and safety.

Gemma performs strongly across dialogue, reasoning, mathematics, and code generation, with Gemma 7B scoring 64.3% on MMLU and 44.4% on MBPP. These results also highlight the untapped potential of openly available LLMs.

The team anticipates that the release of Gemma into the AI development ecosystem will catalyze the creation of a myriad of beneficial applications, particularly in realms like science, education, and the arts. Moreover, the responsible deployment of Gemma holds promise in bolstering the safety of frontier models, thereby fostering the next wave of LLM innovations.

The paper Gemma: Open Models Based on Gemini Research and Technology is on arXiv.

Author: Hecate He | Editor: Chain Zhang


