Unveiling Sora: OpenAI’s Breakthrough in Text-to-Video Generation

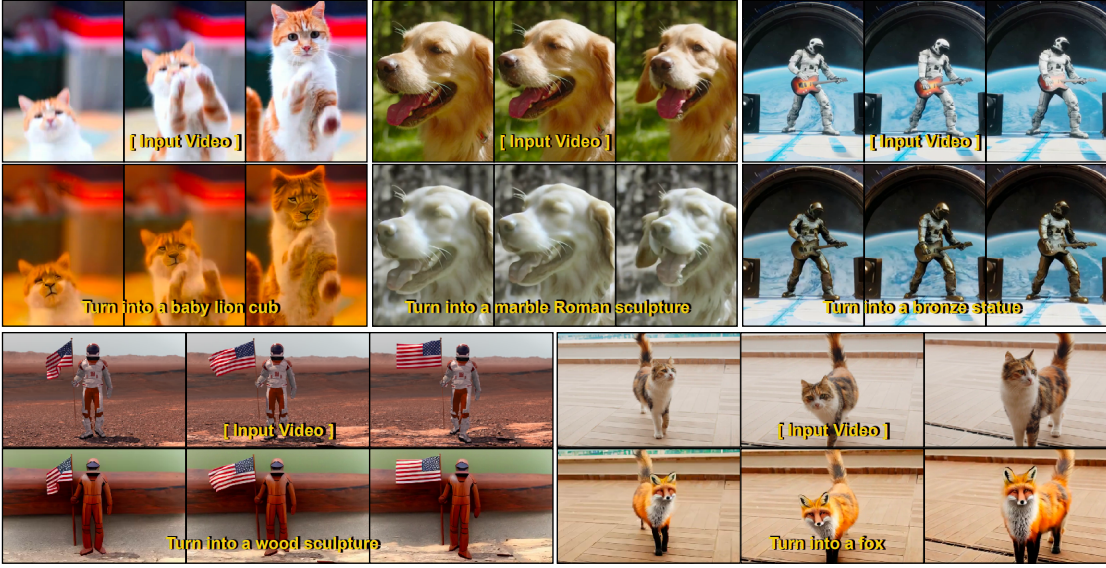

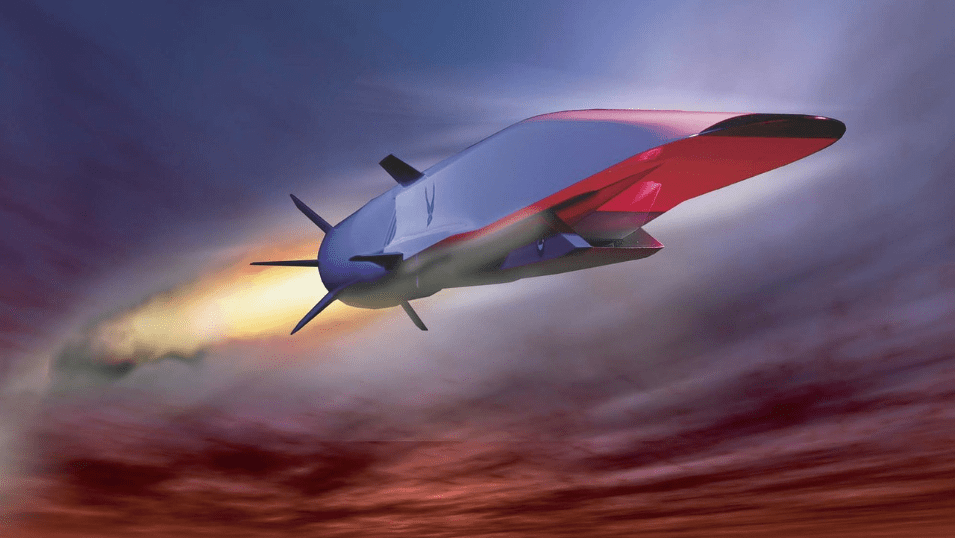

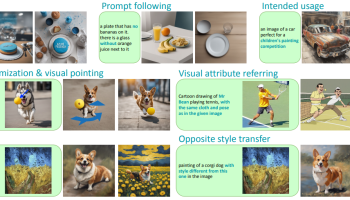

In a recent technical report, OpenAI introduced Sora, a groundbreaking text-to-video model. Sora stands out for its ability to generate both videos and images across a wide range of durations, aspect ratios, and resolutions, including up to a full minute of high-definition video.